Deconstructing the Security and Privacy Risks of Smart Glasses with LLM

The Flaw and the Risk: A Symptom of Systemic Weakness

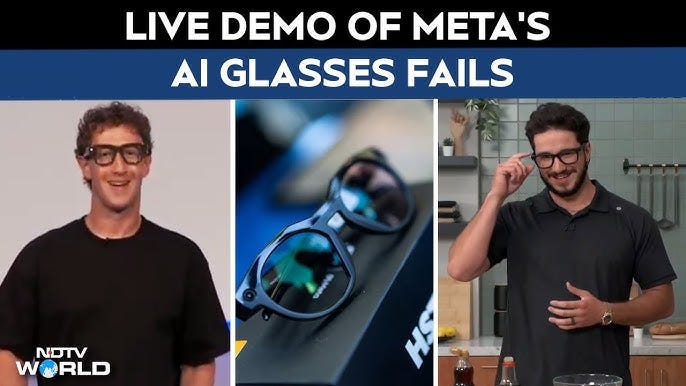

On stage at the Meta Connect event, designed to showcase the future of immersive computing, the reality turned out different. Mark Zuckerberg, in a moment echoing Steve Jobs’ iconic presentations, tried to demonstrate the power of the new Meta Ray-Ban Display Smart Glasses, the centerpiece of his vision for a post-smartphone world.

However, instead of applause, the demo drew laughter. First, a cooking segment with content creator Jack Mancuso abruptly stopped when the glasses ignored his voice commands. Shortly after, a live video call between Zuckerberg and CTO Andrew Bosworth failed to connect, forcing a visibly frustrated Zuckerberg to give up while blaming the Wi-Fi.

The initial explanation about wireless connectivity was quickly debunked. In a later Q&A session, CTO Andrew Bosworth revealed the real technical cause: a resource management error. The voice command “Hey Meta, start Live AI” wasn’t directed only to the chef’s glasses on stage; it triggered every pair of Ray-Ban Meta Smart Glasses in the venue. That flood of simultaneous requests, all routed to a single development server, resulted in a distributed denial-of-service (DDoS) attack. The company, in essence, hacked itself. HAHAHHAA (sorry)

This incident is more than just a PR blunder; it works as an analogy to Microsoft’s infamous Comdex presentation in 1998, when Bill Gates watched Windows 98 crash with the “Blue Screen of Death” during a live demo.

Most importantly, the Meta Connect failure wasn’t just a bug. It was a real-world, non-malicious demonstration of a core vulnerability in these devices: the inability to distinguish between a specific user command and the public context. That systemic confusion is exactly what malicious actors can exploit, turning an AI assistant into an attacker that manipulates the user’s perception.

To understand the severity of this threat, it’s key to dissect the architecture of Extended Reality (XR) systems integrated with Large Language Models (LLMs), like those powering the Meta glasses. The data flow starts with sensors: the camera captures what the user sees, the microphone what they hear. That raw data is pre-processed on the device, then packaged into a query for the LLM (such as Meta AI). The model processes the query, which can include both the voice command and the visual context, and sends a response. The device then translates that response into outputs like overlays on the display or audio feedback.

This creates a unified threat model that differs from traditional cybersecurity. An attack doesn’t need to exploit an OS vulnerability or privileged access. Malicious code can hide inside a seemingly harmless third-party package, like those in Unity’s Asset Store. From there, it doesn’t need to break into the main app; it only needs access to public methods, system events, or the ability to render virtual objects on the screen to manipulate what the system perceives.

The essence of this new attack vector is hacking perception, not code. The attacker isn’t breaking through defenses; they’re lying to the system through its own senses. This can be done in two ways: by altering the physical environment (what the camera sees) or the virtual one (injecting digital overlays). By poisoning the “public context” around a legitimate query, the attacker compromises the data stream before it even reaches Meta AI. Timing also matters: reactive triggers like “Hey Meta” create short attack windows, while proactive background scans open up more frequent but less predictable opportunities. Either way, traditional defenses like sandboxing fail, because the user’s reality, their field of view and hearing, is shared space for all XR apps running at once, making it the main attack surface.

The research paper Evil Vizier: Vulnerabilities of LLM-Integrated XR Systems illustrates this with concrete cases. In an attack on Meta’s QuestCameraKit, researchers exploited a public system event (a hand gesture to start a voice query) combined with deliberate processing delays. The malicious script sent a fake query to the LLM, timed so its audio response would override the genuine one. Results ranged from denial of service (“Sorry, I can’t help with that”) to deliberate confusion (answering a question about the environment with unrelated text about OpenAI’s filters). More dangerously, the attack gave misleading spatial instructions, telling the user to go in “the opposite of left” when the door was actually to the left.

Other tests manipulated the physical world: adversarial stickers on products provided fake nutrition info; a line of text on a computer screen acted as a prompt injection, hijacking the task to display a Sephora ad instead. Beyond commercial manipulation, these revealed real safety flaws. In one case, the glasses gave instructions for heating a container in a microwave but failed to detect a metal spoon inside, an error with dangerous real-world consequences.

So can this be fixed? Mitigation demands a new security model for XR, one that strengthens architecture against context manipulation. It’s not about patching bugs, but redesigning how devices perceive and interact with the world. The research points to best practices:

For developers:

Avoid public events or triggers for LLM queries; isolate them from system events accessible to third-party apps.

Keep system prompts private and defensive; hidden, robust prompts make adversarial prompt engineering harder.

Sanitize inputs by separating raw pixels from semantics. Instead of sending a flat screenshot of an augmented view to a Vision-Language Model, send structured data that separates real camera input from digital overlays. This helps AI distinguish what’s real from what’s synthetic.

For platform providers like Meta:

Build strong security primitives into their ecosystems. This means better sandboxing for XR environments and APIs that enforce best practices by default. Relying on AI to police other AI is inadequate, tests with GPT-5 detecting malicious LLM-generated code showed poor results, highlighting gaps in current training data for XR-specific threats.

In short, while the vision behind devices like Meta’s smart glasses is appealing, the foundation is insecure. Until the core issue of context manipulation is solved at the architectural level, the “evil vizier” (as the paper calls it) will keep whispering lies into users’ ears. For tech professionals and business leaders, the takeaway is clear: adopting these devices in their current state poses a significant and unjustifiable risk to privacy, security, and even the integrity of perceived reality itself.