🔞 Global fragmentation of age verification

Diverging laws and the tension between child protection and privacy: where does that leave us?

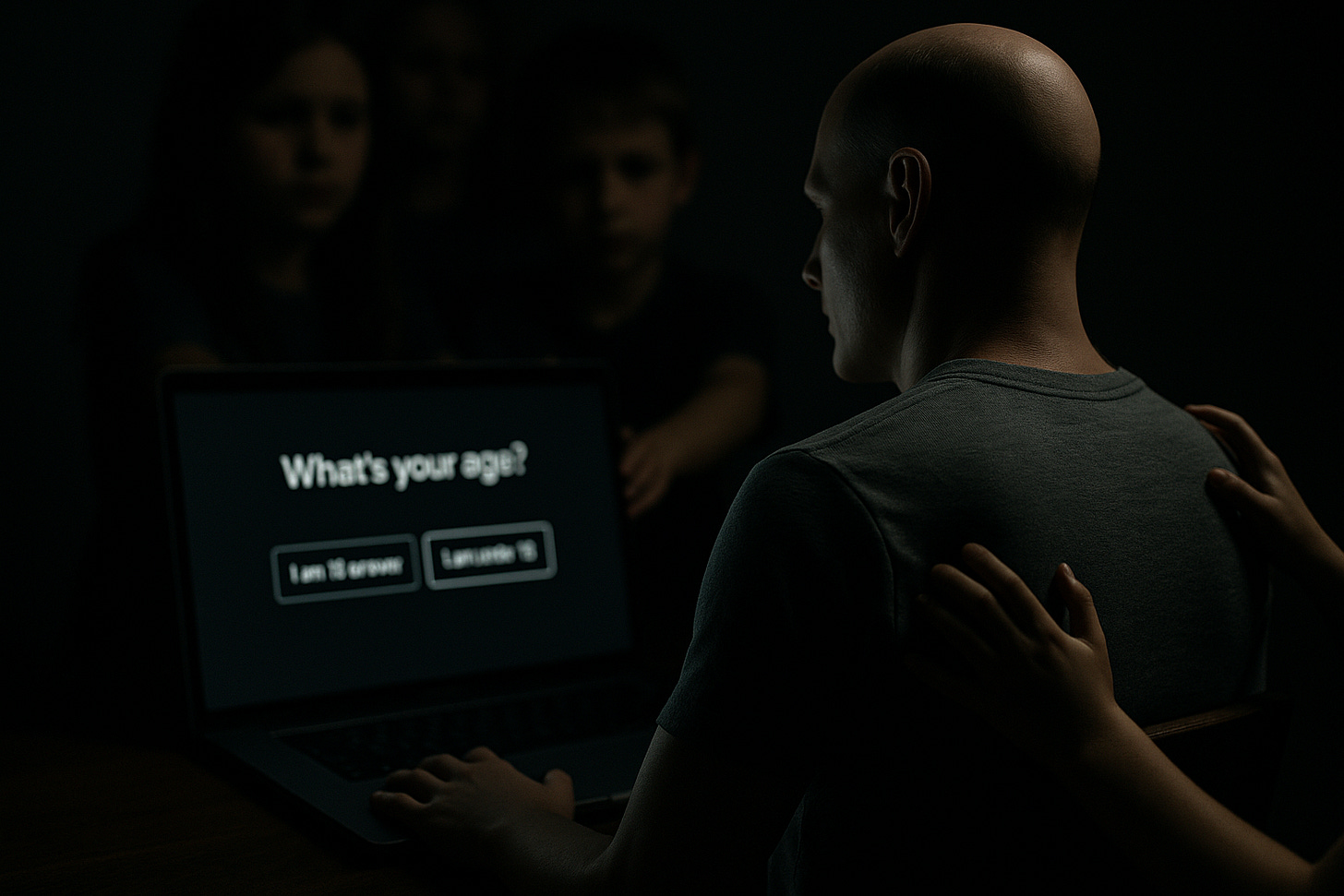

By now, almost everyone has seen Brazilian YouTuber Felca’s video on the sexualization of children on social media, or at least its fallout over the past week (at least in Brazil; I’m not sure how much attention it got abroad). But what does it mean for privacy and age verification online? Platforms might not bear full responsibility, but are they really implementing strong enough safeguards?

In many countries, regulators are working to enforce age restrictions on digital platforms to protect children and teenagers. The result, however, is a fragmented regulatory landscape. Each jurisdiction takes its own approach, often clashing with privacy rights in its attempt to verify users’ ages.

Australia passed a law banning anyone under 16 from social media and requiring platforms to verify users’ ages. Noncompliance can lead to fines of up to AU$49.5 million. The government admits that teens will find ways around it, but argues the measure is justified and puts the onus on the platforms.

In the European Union, the goal is to standardize age verification without sacrificing privacy. In 2025, the European Commission launched a prototype that verifies whether a user is of legal age without revealing their identity—a kind of zero-knowledge proof for age. The solution relies on anonymization and data minimization, topics I’ve often discussed here on the blog, to protect minors without excessive data collection. After all, purpose limitation is the backbone of privacy laws. The EU is trying to strike a balance between child safety and privacy by design.
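To make the data-minimization idea concrete, here is a minimal Python sketch, assuming a hypothetical trusted issuer (say, a wallet app that has already checked an ID document). All function names are hypothetical. The issuer sees the birth date but hands the platform only a yes/no “over 18” claim plus an integrity tag. To be clear, this is not a zero-knowledge proof and not how the Commission’s prototype is built: HMAC stands in for a real public-key signature (so issuer and platform would have to share a secret), and nothing stops a token from being replayed or handed to another user.

```python
import hashlib
import hmac
import json
from datetime import date

# Hypothetical shared secret. HMAC is a simplifying stand-in: a real scheme
# would use a public-key signature so the platform holds no issuer secret.
ISSUER_KEY = b"demo-issuer-secret"


def age_on(birth_date: date, today: date) -> int:
    """Whole years elapsed between birth_date and today."""
    had_birthday = (today.month, today.day) >= (birth_date.month, birth_date.day)
    return today.year - birth_date.year - (0 if had_birthday else 1)


def _tag(claim: dict) -> str:
    """Integrity tag over a canonical JSON encoding of the claim."""
    payload = json.dumps(claim, sort_keys=True).encode()
    return hmac.new(ISSUER_KEY, payload, hashlib.sha256).hexdigest()


def issue_attestation(birth_date: date, today: date, threshold: int = 18) -> dict:
    """Issuer side: sees the birth date, but emits only a yes/no claim."""
    claim = {"age_over": threshold, "result": age_on(birth_date, today) >= threshold}
    return {"claim": claim, "tag": _tag(claim)}


def platform_accepts(attestation: dict, threshold: int = 18) -> bool:
    """Platform side: checks the tag and the boolean; never sees a birth date."""
    claim = attestation["claim"]
    if not hmac.compare_digest(_tag(claim), attestation["tag"]):
        return False  # claim was altered after issuance
    return claim.get("age_over") == threshold and claim.get("result") is True


today = date(2025, 8, 15)
adult = issue_attestation(date(2000, 1, 1), today)
teen = issue_attestation(date(2010, 6, 1), today)
print(adult["claim"])  # {'age_over': 18, 'result': True}
print(platform_accepts(adult), platform_accepts(teen))  # True False
```

The point is where the birth date stops: it never leaves `issue_attestation`, so the platform can enforce the age rule without ever collecting or storing it.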

The United Kingdom has taken a middle-ground approach. Its Online Safety Act requires platforms to protect minors from harmful online content (though “harmful” is left vague) and to verify age when necessary. The law doesn’t mandate a single method; it allows multiple technologies, from AI that estimates age from a photo to document checks. The only requirement is that measures be “highly effective” at keeping under-18s away from such content. This flexibility is meant to bring industry on board, though skepticism remains about both effectiveness and privacy risks, especially since no method today is 100% reliable.

In the United States, with no federal policy, each state legislates on its own. The result is a wave of lawsuits challenging age-verification laws for social media and adult content, often tied to parental consent requirements. Civil liberties groups and companies argue these laws violate the First Amendment, and several courts have already blocked them. In 2025, however, the Supreme Court declined to block a Mississippi law, letting it take effect while the legal challenge continues, despite acknowledged constitutional concerns. The U.S. is left with a patchwork system caught between protecting kids and safeguarding digital rights (and that’s without even mentioning COPPA).

In Brazil, the response has been slower and more political. The ANPD, Brazil’s data protection authority, only acted in 2024, ordering TikTok to fix its flawed age-verification mechanisms. While the LGPD, Brazil’s General Data Protection Law, includes safeguards for minors’ data, enforcement is weak. Anyone can open TikTok, search for dances or Roblox, and quickly get flooded with content from minors, often far from innocent. Meanwhile, bills in Congress propose banning kids under 12 from social media and requiring supervision until 16. Politicians have embraced child protection, often in reaction to media scandals, but I worry these are simplistic or opportunistic measures pushed forward just for visibility. Without technical planning and digital education, the risk is that rights will be undermined without truly protecting children, and the whole effort ends up going nowhere.

In short, child protection online has become a global priority, but coordination is missing. Each country is experimenting, from strict bans to anonymous verification, exposing the tension between the duty to protect and the right to privacy. How to balance child safety and online privacy remains an open question, and I don’t know the answer. Do you?