⚠️ The Invisible Risks of the Model Context Protocol (MCP)

Language models need context to work, but when that context goes beyond expected boundaries, what was once personalization turns into exposure.

I was running some tests with Java ecosystem components for AI integration, exploring how I could automate a marketing application using LangChain4j, a Telegram bot, and MCP connected to Apache Solr. I ran a few experiments with Spring MCP, which seems quite promising… anyway.

It’s simple to implement. But with all this ease, where are the privacy risks hiding?

That’s what we’re going to talk about today.

What is the Model Context Protocol (MCP)?

Before we dive in: since this is a technical topic and not everyone following Privalogy is from the tech world, let me explain it in my own way. Forgive me if you're a hardcore dev; I won’t go too deep here.

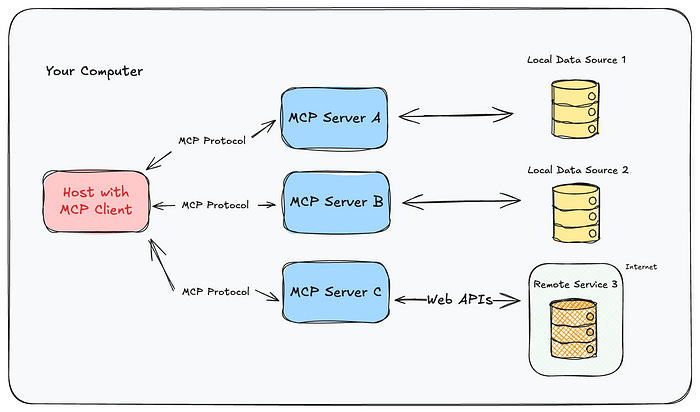

With the rise of intelligent agents, copilots, and assistants powered by LLMs, there came a need to standardize how different components like the user, the model, the tool, and the data source share the state of the conversation and the environment. That’s where the Model Context Protocol (MCP) comes in. It was introduced by Anthropic in November 2024 as an open standard.

MCP is a specification that defines how to represent, store, share, and transport conversational and functional contexts for language models in an interoperable way. Instead of building proprietary structures, companies adopting MCP can interoperate with different LLMs and tools while maintaining data flow consistency.

For example, an agent that starts a log analysis on one platform, retrieves files from Google Drive, and sends alerts via Slack can preserve the task’s state and the user’s history throughout the process, even when switching models or components. MCP standardizes this, promoting reusability and continuity.

If you’ve read my book Privacy for Software Engineers (which, by the way, will be on promotional sale on Amazon on April 23 and 24 - shameless plug!), you know I like to explain technical concepts with a little storytelling. So here’s an analogy for MCP:

Think of context as a backpack your assistant carries. Inside, they put everything they see, hear, and learn while helping you. If you say "remind me to buy João’s medicine," they write it down. If you show a spreadsheet, they keep a copy.

Context is no longer invisible. It's the most valuable data you never meant to expose.

Now imagine that assistant gets replaced, and the backpack is handed over to someone new. If no one checks what’s inside, the new assistant will know about João’s medicine, your financial spreadsheet, and maybe even that email you dictated out loud. And if that backpack gets left on a park bench (like an open server)? Anyone could open it.

That’s what MCP does: it packages context so tasks can continue. But if not properly controlled, it becomes a suitcase full of personal data left out in public.

Where are the privacy risks?

Despite the fancy acronyms and “new concepts,” at the end of the day, it all comes down to system integration. Nothing in MCP or similar proposals is truly groundbreaking; it’s all about data exchange and interoperability, boiled down to the basics.

And anyone working with privacy and data protection knows that system integration, by nature, introduces risk, whether to information security, confidentiality, compliance, or, of course, privacy.

The problem lies in what gets included in the context. MCP relies on structured representations of:

Who is the user (or users)?

What is the ongoing task?

What data has been accessed?

What tools have been used?

What’s the conversation or action history?

What metadata is involved (like location or organizational identity)?

When this information is stored, persisted, or shared across different LLMs, real risks emerge:

1. Accidental persistence of personal data

When using MCP with persistent storage (for example, Elasticsearch to log agent interactions), personal information like names, emails, commands, URLs, or IDs might be indexed without anonymization, or at the very least, without proper masking. What should’ve been a temporary trace becomes a full behavioral database of the user. Not very different from how ChatGPT logs work today.

Real-world example:

A hospital’s security team was logging LLM interaction contexts into an Elasticsearch cluster for auditing. Upon review, they found access tokens, patient names, and internal emails stored in the “raw context” field. No one had filtered what should be persisted. The result? A potential privacy incident, with sensitive data exposed to anyone running a search query.
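To make the "filter before you persist" idea concrete, here is a minimal sketch of a redaction step that would run before a context is indexed. Everything in it is an assumption for illustration: the field names in `DROP_FIELDS` and `MASK_FIELDS`, the two regular expressions, and the sample context are hypothetical, and pattern matching alone will not catch every kind of personal data.

```python
import re

# Hypothetical patterns; a real deployment would tune these to its own data.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
BEARER_RE = re.compile(r"\bBearer\s+[A-Za-z0-9._~+/-]+=*", re.IGNORECASE)

# Hypothetical field names: dropped entirely vs. kept but masked.
DROP_FIELDS = {"access_token", "api_key", "password"}
MASK_FIELDS = {"name", "patient_name"}


def redact(value):
    """Return a redacted copy of `value`; the input is never modified."""
    if isinstance(value, dict):
        out = {}
        for key, item in value.items():
            if key in DROP_FIELDS:
                continue  # secrets never reach the index
            out[key] = "[REDACTED]" if key in MASK_FIELDS else redact(item)
        return out
    if isinstance(value, list):
        return [redact(item) for item in value]
    if isinstance(value, str):
        value = BEARER_RE.sub("[TOKEN]", value)
        return EMAIL_RE.sub("[EMAIL]", value)
    return value


# Illustrative "raw context" similar to the one described above.
raw_context = {
    "user": {"name": "Maria", "email": "maria@hospital.example"},
    "access_token": "abc123",
    "history": [
        "Send the report to joao@hospital.example",
        "Authorization: Bearer eyJhbGciOi.payload.sig",
    ],
}
safe_context = redact(raw_context)  # this copy is what would be indexed
```

The point of the design is that the indexing code only ever sees `safe_context`, so forgetting to filter is no longer the default behavior.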

2. Insecure context reuse

When context is serialized (via JSON, YAML, etc.) and passed between components, it can be reused by agents that shouldn’t have access to the full data. This is especially common in systems using Google Drive (or S3, Azure, etc.), where files and spreadsheets with personal and sensitive data are referenced in the context for decision-making, but access control is weak.

Real-world example:

A Retrieval-Augmented Generation (RAG) project used Google Drive documents to store context. Each model interaction produced a summary with names, goals, and referenced documents. This was saved as a “modeled” context. When shared with another agent to continue the task, that agent gained access to information that should’ve been private to a different team.
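One way to reduce this kind of over-sharing is to filter the context per recipient before handing it over. The sketch below assumes a hypothetical policy table (`ALLOWED_FIELDS`) and hypothetical agent and field names; it is not part of MCP itself, just an illustration of deny-by-default sharing.

```python
from copy import deepcopy

# Hypothetical policy: which context fields each receiving agent may see.
ALLOWED_FIELDS = {
    "support-agent": {"task", "summary", "documents"},
    "bi-agent": {"task", "summary"},
}


def context_for(agent_id, context):
    """Return only the fields `agent_id` may receive (unknown agents get nothing)."""
    allowed = ALLOWED_FIELDS.get(agent_id, set())
    return {key: deepcopy(value) for key, value in context.items() if key in allowed}


# Illustrative context produced by one team's agent.
context = {
    "task": "renewal-follow-up",
    "summary": "Customer asked about pricing",
    "documents": ["drive-file-id-123"],
    "participants": ["Ana", "Bruno"],
}
shared = context_for("bi-agent", context)  # no documents, no participants
```

An agent that is not listed in the policy receives an empty context, which is usually the safer failure mode.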

3. Context bleeding: when context leaks

By design, MCP contexts can be exported. This is helpful for task continuity across devices, but it also opens the door for data leaks. A model can receive context with “hidden instructions,” embedded data, or sensitive info disguised as notes. This can even be used as a covert exfiltration channel.

Theoretical example:

A sales agent uses a copilot that logs MCP histories of negotiations. An attacker inserts a link to a malicious file into the context. If another agent or model later processes that context without validation, the file could be opened or executed, leading to data exposure.
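A simple first line of defense for the scenario above is to check every link in an incoming context against an allowlist before any agent acts on it. This is a minimal sketch under stated assumptions: the hosts in `TRUSTED_HOSTS` are hypothetical, the URL pattern is deliberately simple, and it does nothing about hidden instructions written as plain text.

```python
import re
from urllib.parse import urlparse

# Hypothetical allowlist of hosts this agent is permitted to follow.
TRUSTED_HOSTS = {"docs.example.com", "crm.example.com"}
URL_RE = re.compile(r"https?://[^\s\"'<>]+")


def untrusted_links(context_text):
    """Return every URL in the text whose host is not on the allowlist."""
    flagged = []
    for url in URL_RE.findall(context_text):
        host = urlparse(url).hostname or ""
        if host not in TRUSTED_HOSTS:
            flagged.append(url)
    return flagged


def accept_context(context_text):
    """Refuse the whole context if any link points outside the allowlist."""
    bad = untrusted_links(context_text)
    if bad:
        raise ValueError(f"context rejected, untrusted links: {bad}")
    return context_text


# Illustrative negotiation history with one injected link.
history = (
    "Proposal at https://docs.example.com/p/42 and pricing at "
    "http://files.attacker.example/price.xlsm"
)
flagged = untrusted_links(history)
```

Rejecting the whole context, rather than silently stripping the link, keeps the incident visible to whoever reviews the logs.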

Two agents, two models, and no one knows who answered.

Let’s imagine a more relatable scenario. Picture a company that uses a virtual assistant named Helena to handle customer support via chat, answering product-related questions. This assistant operates using MCP and is orchestrated by a framework that dynamically decides which LLM will process each part of the conversation.

Here’s how it works on the backend:

For objective questions (like return policies), Helena uses ChatGPT (GPT-4).

For more technical explanations about how the product works, she switches to DeepSeek, a model better trained on technical data.

All of this is orchestrated through MCP, which encapsulates the conversation state and pending tasks.

Now comes a second agent: Camila, the internal BI assistant. Camila receives Helena’s “support summary” and the customer’s topics of interest, using the same MCP for continuity. But here’s the catch: Camila has no idea (and no way of knowing) which parts of the response came from ChatGPT and which came from DeepSeek.

The interface used by both agents treats LLMs like commodities. The context is passed via MCP, and the model is selected at runtime by a routing layer that doesn’t expose metadata about the source.

The problem:

If a critical error occurs, like a data leak or a compliance breach, no one will be able to trace which model was responsible for the specific response. That’s because MCP handled the whole thing as a single block.
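One way around the single-block problem is to tag each response with its origin at the moment it is added to the context. The sketch below assumes the routing layer can report which model it selected; the `Turn` class, its fields, and the sample responses are hypothetical and only illustrate the idea.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class Turn:
    """One response in the shared context, tagged with where it came from."""
    text: str
    model: str  # model name as reported by the (hypothetical) routing layer
    agent: str  # agent that produced the turn
    created_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def turns_by_model(turns, model):
    """Return the turns that a given model produced."""
    return [turn for turn in turns if turn.model == model]


# Illustrative conversation handled by Helena with two different models.
conversation = [
    Turn("Returns are accepted within 30 days.", model="gpt-4", agent="helena"),
    Turn("The device pairs over Bluetooth LE.", model="deepseek", agent="helena"),
]
from_deepseek = turns_by_model(conversation, "deepseek")
```

With this in place, an agent like Camila receives the summary along with enough metadata to answer "which model said this?" during an incident review.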

The Model Context Protocol doesn’t forget. And neither will your users if their data leaks.

MCPMarket – Thousands of MCPs for you

On the MCPMarket website, you’ll find thousands of MCP components ready to plug into your systems. There’s everything you can imagine.

One of these components, for example, is the “Java Filesystem” MCP. It allows an LLM to interact with a server’s file system. Say you have a directory like C:\myfiles with hundreds of files. You can spin up a server using this platform, and with any compatible model, you can prompt and search through your files in different ways.

Sounds like magic, right?

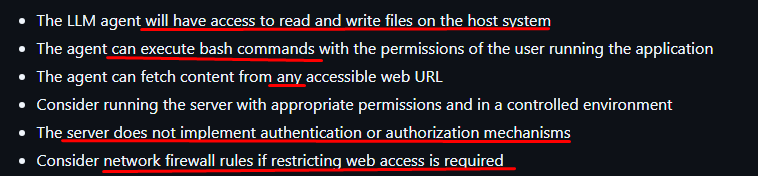

But take a look at the risks pointed out by MCP itself:

Now, will everyone integrating systems just for convenience pay attention to this? Let’s be honest: hardly anyone reads documentation. It’s all copy and paste, it works, and that’s that. The problems show up later.
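For a filesystem-style component like the one described above, one basic precaution is to confine every requested path to the directory you meant to share. The helper below is a hypothetical sketch, not code from any real MCP server; it assumes Python 3.9 or later for `Path.is_relative_to`.

```python
from pathlib import Path


def resolve_inside(root, requested):
    """Resolve `requested` under `root`, refusing anything that escapes it."""
    root_path = Path(root).resolve()
    # resolve() collapses ".." segments and follows symlinks before the check.
    candidate = (root_path / requested).resolve()
    if not candidate.is_relative_to(root_path):
        raise PermissionError(f"{requested!r} is outside the allowed directory")
    return candidate
```

A request such as `resolve_inside("C:/myfiles", "../secrets.txt")` would raise `PermissionError` instead of quietly reading a file outside the shared folder.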

Alright, alright! I’ve probably convinced you there are real challenges and governance risks when working with MCP (or any integration between systems and LLMs). So how do we fix it?

Clearly separate contextual data from personal data

Create deletion and filtering policies before persisting or serializing context. Use context writers that remove or mask personal information, tokens, and sensitive data.

Validate context before reuse

Never inject a context from another agent or model without explicitly validating its fields. Use context sanitizers and safe serialization schemas.

Define a lifecycle with TTLs and limited scope

Contexts should expire. Once the task is complete, the context must be discarded. If retained for auditing, it should be separated from the live production environment.

Access control for stored contexts (like in Elasticsearch)

If MCPs are persisted, use RBAC, encryption at rest, and field masking. Monitor suspicious queries and review which users can access the index.

Beware of convenience features

The easier it is, the higher the potential risk.

Avoid storing direct links to external sources

Never store direct links to Google Docs, spreadsheets, S3 buckets, or other resources in the context. Use IDs with identity-based resolution controls.

Log and version every context in production

Every MCP context used in production must be auditable. Store hashes of the context and track which models or agents processed them, for audit trails and incident rollback.

Create clear organizational policies for context use in agents

Define what can and cannot be included in an MCP. Train developers and analysts on the risks, and incorporate MCP into your AI governance framework.

Assess the risks of each MCP component thoroughly

As I showed earlier, there are thousands of MCPs out there, and not all are high quality or trustworthy. Some may receive data and send it off to clouds you’ve never heard of, or didn’t take the time to research. Most are open source. Study the code. Customize it. Don’t just use anything blindly.
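Two of the recommendations above, expiring contexts and keeping hashes for audit, can be sketched together in a few lines. This is an in-memory illustration only: `ContextStore`, its method names, and the `processed_by` label format are hypothetical, and a production system would back this with real storage and access control.

```python
import hashlib
import json
import time


def context_hash(context):
    """Stable SHA-256 of a context; key order does not change the digest."""
    canonical = json.dumps(context, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


class ContextStore:
    """In-memory store where every context expires after `ttl_seconds`."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock
        self._items = {}     # context_id -> (expires_at, context)
        self.audit_log = []  # (context_id, digest, processed_by); no raw data

    def put(self, context_id, context, processed_by):
        """Store a context and record who processed it, by hash only."""
        self._items[context_id] = (self._clock() + self._ttl, context)
        self.audit_log.append((context_id, context_hash(context), processed_by))

    def get(self, context_id):
        """Return the context, or None if it is missing or past its TTL."""
        entry = self._items.get(context_id)
        if entry is None:
            return None
        expires_at, context = entry
        if self._clock() >= expires_at:
            del self._items[context_id]  # expired: discard the data itself
            return None
        return context


# Usage with a short TTL; the audit entry outlives the context data.
store = ContextStore(ttl_seconds=900)
store.put("ctx-1", {"task": "refund", "step": 2}, processed_by="helena/gpt-4")
```

Keeping only the digest in the audit trail means you can later prove which context a model processed without retaining the personal data inside it.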

Whether you’re an AI engineer (or context engineer… that’s a real job now), an LLM architect, or a DPO trying to understand how these agents work, remember this:

Context is power, and also responsibility.

If you enjoyed this content, subscribe to Privalogy. I try to share at least two pieces like this each week.

MCP opens the door to interoperability and task continuity in AI. But without strong privacy practices built from the start, that door can lead to serious consequences.

Are you building MCP servers responsibly?
